Apr 12, 2023 · 5 min read

Batch computing and the coming age of AI systems

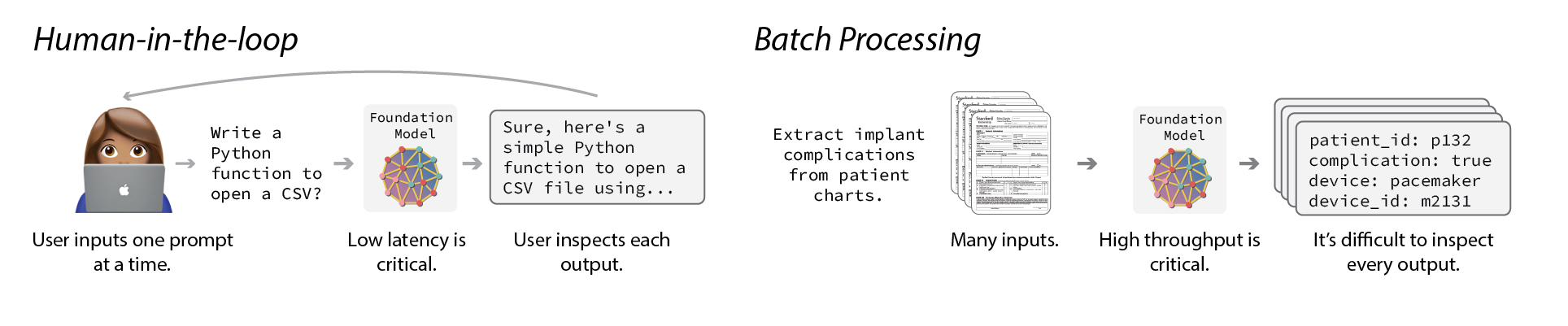

There’s a lot of excitement right now about human-in-the-loop systems supercharged by foundation models including chat assistants (ChatGPT), word processing (Microsoft Office), graphic design (Stable Diffusion), and code editing (Copilot). However, these systems only begin to scratch the surface of the role foundation models could play in our world. There’s another set of workloads, which we refer to as batch processing tasks, that require processing many inputs without a human-in-the-loop. If foundation models could be applied reliably and at scale, they could power complex batch processing tasks that impact every part of our society - from how we deliver healthcare, to how we do science and understand our economy.

This divide between human-in-the-loop and batch computing already exists in software today. There are human-in-the-loop systems like search, which are highly visible and impactful to everyday users, but only account for a fraction of the role computing plays in the world. Much of our economy depends on batch processing systems that run at scale behind the scenes, without humans in the loop. Today, this includes systems for everything from processing financial transactions to managing supply chains to analyzing scientific and health data. Understanding these two settings can help us frame where foundation models are and where they could go.

Before foundation models, tackling batch processing tasks with AI was impactful but took significant time and expertise. Some examples from our own work:

- In healthcare: we built systems to do medical device surveillance and understand whether implants were systematically failing veterans. We also contributed to systems that help diagnose rare genetic disorders in children.

- In human rights: we worked on systems to assist in the fight against human trafficking.

- In science: we developed a system for organizing huge swaths of scientific literature in geology and beyond.

Each of these systems took PhD-decades to build. Our recent work suggests foundation models could dramatically reduce the time and difficulty of building AI-powered batch processing systems like these. This democratization puts amazing technology in the hands of the amazing people of the world who can use it to improve all of our lives. From simplifying observational studies in medical research to automating accounting processes for businesses, we’re incredibly excited about the impact foundation models could have when applied to batch processing tasks.

However, using foundation models effectively in batch processing applications brings a new set of challenges that aren’t present in human-in-the-loop applications. Unlike chat and autocomplete, which have low data volumes per user and depend on low latency responses, batch processing applications have high data volume and need to be optimized for high-throughput data processing. This is particularly important given the enormous compute and memory requirements for serving these models. Moreover, because of the volume, batch processing systems must maintain high quality without humans in the loop manually reviewing the output of each operation. These differences point to unanswered research questions and room for improvement.

We’ve already made some headway on these challenges with efficiency and quality in applying foundation models to batch processing - and our early experiments suggest there’s a long way to go.

- Systems improvements: We think there is significant room for systems improvements to improve efficiency for exact inference in batch processing. In FlexGen, we were able to achieve 100x higher maximum throughput for foundation model inference in resource-constrained settings by optimizing offloading strategies for high-throughput instead of latency.

- New trade-offs: With new strategies for querying foundation models, we can explore new quality and accuracy trade-offs for batch processing and achieve asymptotically better performance. In Evaporate, we showed that instead of directly extracting data with foundation models, we can generate code that does the processing for us. This leads to foundation model compute costs that are fixed with respect to the size of the data to be processed. Our study on 16 real-world evaluation settings showed we could achieve 12.1 F1 improvements in extraction quality with 110x reduction in tokens processed by the foundation model.

- Quality and validation: Although foundation models have impressive capabilities across a broad set of tasks, they fail in surprising ways. When applied at scale without a human-in-the-loop, these failures could go unnoticed if users don’t perform careful evaluations. The issue is, existing validation tooling is not suited to FMs. In traditional machine learning, the cost of collecting training data outweighs the cost of collecting validation data, but since FMs don’t require training data, evaluation is now the rate-limiting-step. In Meerkat, we’re making FM evaluation more accessible and efficient by creating new error analysis interfaces and asking FMs to evaluate themselves.

We’re just getting started here. Our bet is that enabling foundation models to reliably and efficiently perform batch processing tasks will yield a new class of data systems and impactful applications that we are just beginning to understand.

Acknowledgements

Thanks to Simran Arora, Arjun Desai, Karan Goel, Ce Zhang, Ines Chami, Benjamin Spector for their comments and feedback on this post.