Oct 11, 2022

Foundation Models are Entering their Data-Centric Era

Chris Ré and Simran Arora

All images in this post were generated by Stable Diffusion using creative prompts (https://beta.dreamstudio.ai/dream).

The rise of foundation models like GPT-3, CLIP, DALL-E (2), Imagen, Stable Diffusion, and many more has been amazing and a lot of fun. These artifacts seem to offer magical generative and in-context-learning abilities that were hard to imagine even a few years ago. We were drawn to AI/ML for the confluence of engineering, math (algorithms/models), and the ability to dream (data, the emergent properties of this data, and applications), and we love this magic! This post explores the idea that these massive technologies are commoditizing quickly (models aren't just the realm of behemoth corporations anymore) and that the value is increasingly in the description of the world and the problem, which means: the data. The consequences of this haven't truly been worked out yet, so a lot of this is conjecture.

prompt: "taco cat" (don't take us too seriously)

From an ML point of view, the notion of a task is an absolute basic: we create some training data to specify our task and train the model to generalize. Accordingly, two pieces of conventional wisdom have been critical for decades:

- "Garbage in, garbage out": the knowledge provided to the model via the data/features determines your success or failure.

- "Too many parameters leads to overfitting": for the last two decades or so, sparsity was all the rage for developing models that generalize! Part of the conventional wisdom for reducing overfitting was that sparse models had fewer parameters and so would generalize better.

These maxims have a lot of truth. Nevertheless, they can also both be a bit misleading.

Foundation models are changing our understanding of a task, since they are trained on broad data and applied to many tasks. These models are being applied out of the box (with no task-specific training), even when users themselves only have a vague understanding of the tasks they are after! They can be controlled with natural language, an interface that is propelling their use by domain experts who want to quickly experiment with these magical capabilities in new settings. Users aren't curating a task-specific training dataset as the first step in this exploratory process; they're playing, dreaming, and rapidly iterating on the ideas they dream up! With foundation models, we want to understand how they transfer to a whole host of tasks, including tasks we haven't yet anticipated.

To make progress on the next wave of AI, it may be helpful to revisit the limitations (and wisdom) of these ancient maxims. In this post, we'll start there, then talk about the changes we see with foundation models (FMs), and finally discuss how we see FMs fitting in alongside traditional approaches.

Garbage in, Garbage Out – no more?

Taskless foundation models are exploding, and a lot of the progress to date has been about model architecture and engineering. But we are beginning to see these converge, and data is emerging as the essential, differentiating aspect. Is there any precedent for this? Well, we've seen the pendulum between model-centric and data-centric progress swing back and forth a few times in supervised ML!

While we were working on a series of projects in the latter half of the 2010s, feature quality was key. In the old models, features were a vehicle to encode domain knowledge. Features were brittle, and practitioners needed to handle a host of low-level details about how to represent this information for more stable and reliable prediction.

A lot of deep learning's success came from the fact that people were pretty bad in these roles. With the deep learning revolution in full swing, new models were coming out on arXiv by the day; it was awesome! These models took previously manual operations like feature engineering and automated them entirely: deep learning showed remarkable success in featurizing raw data like text and images. It was an unbelievable productivity boost. However, the models weren't perfect, and knowledge about the world still seemed important.[1] So how does it get into the model?

Well, we saw that training data was where users were effectively injecting knowledge, explaining their applications, and interfacing with the model, a process that was happening in the intellectual dark of night with no tools, theory, or abstractions! Our idea was that users should have some basic programming abstractions for their data, and so the Snorkel project (and then company) was born. Intellectually, this was our entry into data-centric AI and weak supervision. We drew two important lessons from this:

- Once a technology stabilizes, the pendulum of value swings back to the data. In this case, deep learning technology commoditized with the advent of TensorFlow, PyTorch, MXNet, Theano, etc., but the description of your particular problem did not, given the wide range of data distributions, task specifications, etc. As a result, success or failure depended on how well you brought the relevant information to the model.

- We can (and need to) handle noise, and fundamental math and engineering help us do this in a principled way. It's challenging for users to perfectly express their knowledge in the training data, and different sources of data can vary in quality. While working on the underlying theory of weak supervision, we realized that models could learn a lot from noisy data (not all garbage was bad!). That is, we couldn't take in pure garbage, but we also didn't have to be fastidious neat freaks about our data either. Snorkel's papers developed a statistical theory to help us cope with noise. Of course, as card-carrying academics, we worried a lot about the optimal way to handle noise and spent more than half a decade developing it, building on other amazing work. Alex and team recognized something more significant: Snorkel changed how you build applications. This viewpoint is what spawned Snorkel the company and the wide interest in what's now called data-centric AI.
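To make "programming abstractions for your data" and "coping with noise" a bit more concrete, here is a minimal sketch in plain Python. The labeling functions, example texts, and per-source accuracies are all hypothetical, and the aggregation is a simple accuracy-weighted vote with log-odds weights. It is a stand-in for, not a reproduction of, Snorkel's label model, which estimates source accuracies from their agreements and disagreements rather than taking them as given.

```python
import math

ABSTAIN, NEG, POS = -1, 0, 1

# Hypothetical labeling functions: each encodes a noisy heuristic and may abstain.
def lf_contains_great(text: str) -> int:
    return POS if "great" in text.lower() else ABSTAIN

def lf_contains_refund(text: str) -> int:
    return NEG if "refund" in text.lower() else ABSTAIN

def lf_exclamation(text: str) -> int:
    return POS if text.endswith("!") else ABSTAIN

LABELING_FUNCTIONS = [lf_contains_great, lf_contains_refund, lf_exclamation]
# Assumed accuracy of each source; in practice these would be estimated, not hand-set.
ACCURACIES = [0.9, 0.85, 0.6]

def weighted_vote(text: str) -> int:
    """Combine noisy votes, weighting each source by the log-odds of its accuracy."""
    score = 0.0
    for lf, acc in zip(LABELING_FUNCTIONS, ACCURACIES):
        vote = lf(text)
        if vote == ABSTAIN:
            continue
        weight = math.log(acc / (1 - acc))
        score += weight if vote == POS else -weight
    if score == 0:
        return ABSTAIN  # no source fired (or the evidence is perfectly balanced)
    return POS if score > 0 else NEG

for text in ["This product is great!", "I want a refund!", "Arrived on time."]:
    print(text, "->", weighted_vote(text))
```

In the second example, the low-accuracy exclamation heuristic votes positive, but the more reliable refund heuristic outweighs it. The noisy source isn't thrown away; it simply counts for less.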

prompt: "noisy image". See anything cool in the noise?

More succinctly: data encodes your problem and your analysis, and when technology commoditizes, the value remains in the data. It's not that garbage is great; it's that "garbage or not" is too categorical a distinction. Data often isn't simply garbage or not; what matters is exploiting it in the maximally useful way.

Foundation models are trained on massive amounts of data and applied to a wide range of tasks, raising new challenges in data curation. As the models/architectures commoditize, we need to understand how to curate the massive amounts of data (efficiently!) such that the models are generally useful.

Too many parameters leads to overfitting?

Why do we see magical in-context behaviors emerge? How do modeling choices (architecture and algorithms) give rise to it? Do the magical behaviors of LLMs come from magical model-recipes?

The rough version of machine learning generalization theory a decade or so ago was that if a model was parsimonious (i.e., it wasn't able to fit too many spurious features), then it would generalize. One can make rigorous versions of this statement, and these were major accomplishments of theoretical ML, such as VC dimension, Rademacher complexity, and more. Somewhere along the way, we started to act as if a small number of parameters was also necessary for generalization... but it isn't! Overparameterization was a major concern, but now we have large models as a counterexample: these models (with more parameters than data points) can fit a huge number of crazy functions, even random labels, yet they still generalize on real data.[2]
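The "more parameters than data points" half of this story is easy to see for yourself. The sketch below assumes NumPy and uses arbitrary, hypothetical sizes and a random-features model chosen purely for simplicity. It fits completely random labels exactly with a minimum-norm least-squares solution. It deliberately shows only that an overparameterized model can memorize noise; the surprising empirical part, that such models still generalize on real data, is not something a toy like this demonstrates.

```python
import numpy as np

rng = np.random.default_rng(0)
n_points, input_dim, n_features = 50, 5, 500  # more parameters (500) than data points (50)

X = rng.normal(size=(n_points, input_dim))
W = rng.normal(size=(input_dim, n_features))  # fixed random projection (hypothetical feature map)
features = np.cos(X @ W)  # random features; only the 500 output weights are fit

# Labels drawn at random: there is no signal in X to learn.
y_random = rng.choice([-1.0, 1.0], size=n_points)

# Minimum-norm least-squares fit; with more features than points (and full row rank),
# it interpolates the training labels up to floating-point error.
theta = np.linalg.pinv(features) @ y_random
train_error = np.max(np.abs(features @ theta - y_random))
print(f"max training error on random labels: {train_error:.1e}")
```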

Our views around overparameterization were misleading us, and recent insights opened up a new direction. We see magical behaviors emerge in these larger models, but the next view that caught on was that only very specific architectures, trained on machines that few people have access to, give rise to these properties.[3] One direction in our own work, and in many others', is to try to get simple, classical models to exhibit these magical behaviors. Our recent state space models build on a few decades of signal processing (so they fit the classical bill), and we recently showed that they have in-context abilities. More fascinating to us, even classical bidirectional BERT models have in-context abilities! We're sure there are many other folks writing papers here; please send them our way and we'll read and cite them. Our point is only that these magical properties of in-context learning are all around us! That is, the universe is an even more magical place than we realized... or, more soberly, perhaps humans are just terrible at understanding conditional probability.[4] Either way, it's fun.
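For readers who haven't seen state space models, the classical signal-processing connection fits in a few lines. The sketch below assumes NumPy and is a generic discrete linear SSM with random, hypothetical parameters, not the specific models from our papers (which add structured parameterizations, discretization, and more). It only checks a textbook fact: running the recurrence step by step and convolving the input with the unrolled kernel K[j] = C A^j B give the same output.

```python
import numpy as np

rng = np.random.default_rng(0)
state_dim, seq_len = 4, 16

# Hypothetical random parameters for a generic discrete linear SSM:
#   x[k] = A x[k-1] + B u[k],  y[k] = C x[k],  with x[-1] = 0
A = 0.25 * rng.normal(size=(state_dim, state_dim))  # scaled down to keep values well-behaved
B = rng.normal(size=(state_dim, 1))
C = rng.normal(size=(1, state_dim))
u = rng.normal(size=seq_len)  # a scalar input signal

def ssm_recurrent(u):
    """Run the state space model one step at a time (the recurrent view)."""
    x = np.zeros((state_dim, 1))
    ys = []
    for u_k in u:
        x = A @ x + B * u_k
        ys.append((C @ x).item())
    return np.array(ys)

def ssm_convolutional(u):
    """Unroll the recurrence into a kernel K[j] = C A^j B and convolve (the convolutional view)."""
    kernel = np.empty(len(u))
    A_power = np.eye(state_dim)
    for j in range(len(u)):
        kernel[j] = (C @ A_power @ B).item()
        A_power = A_power @ A
    # Causal convolution: y[k] = sum_j K[j] * u[k - j]
    return np.convolve(u, kernel)[: len(u)]

print(np.allclose(ssm_recurrent(u), ssm_convolutional(u)))  # True
```

Having both views is part of what makes these models attractive: the recurrence gives cheap step-by-step inference, while the convolution lets training process a whole sequence at once (in practice with FFTs rather than the direct convolution used here).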

A lot of things seem to work well in the regime of large models. The magical behaviors in foundation models appear stable and commoditizable, and we see data arising as a differentiating aspect.

Might this be the data-centric age for foundation models?

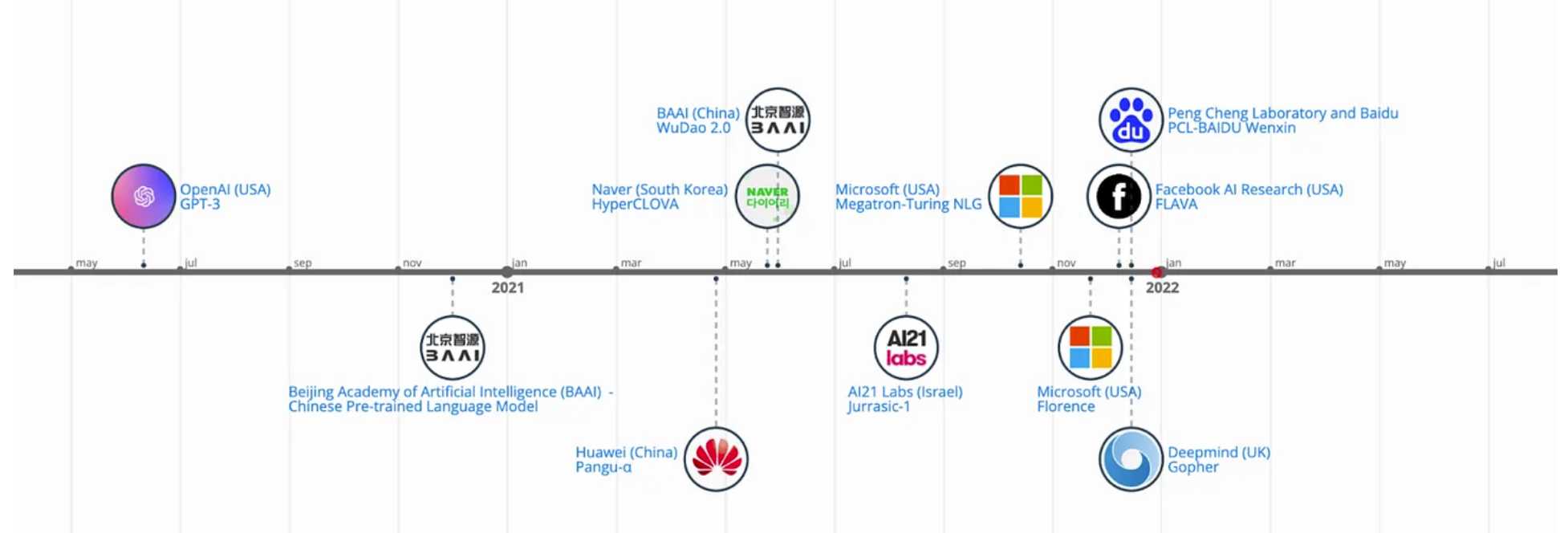

Are we repeating the data-centric shift of supervised learning? That is, are models and engineering becoming commoditized?

Commoditizing Models and the Rise of Open Source

We're seeing foundation models commoditize and become applied; it feels a lot like deep learning. To us, the greatest proof that these models are commoditizing is the rate at which they are becoming available: OPT, etc. There are two main forces: people want them (stability, etc.), and large corporations can use them. Open source didn't take off because lots of hobbyists wanted it; it took off because companies beyond the largest corporations and governments decided they needed this stuff (cf. Python's rise). So much of what's in those models is ideas pioneered by smart students! This one seems to be in everyone's economic interest, not least because nation states have interests here too. It's awesome!

Waiting for the next mega model from the newest megacorp to drop? P.C.

What's becoming most differentiated? The data! These tools are increasingly available, but honestly, foundation models don't always work great out of the box. So how do you fix them? How do you deploy them? Wait for the next mega model from the newest megacorp to drop? That's one strategy! We applaud your nihilism! Will that model be open-sourced? Hard to say. And what about applications of foundation models on private data that can't be shipped to an API? Will the model have 100 trillion parameters, and if so, how many users can access and use it? What is the model trained on? Models are mostly trained on public data (or rather, they should be; ahem, Copilot, we still love you).[5] So there is little guarantee that the model will know about what you care about. How do you preserve the magic of foundation models and get them to do what you want? You have to curate data for foundation models more effectively (data is critical!) or figure out how to use the great open-source models at test time (shaping inputs and contextual data at test time is critical!):

- Data-curation and Data-Centric Scaling Laws? Prediction: smarter dataset collection methods lead to smaller and better models. We should point out the amazing scaling-law papers that really opened our eyes: the original OpenAI paper, which started the scaling-law investigations, and the Chinchilla paper from DeepMind, which showed corrected rates for how much training data you need given a certain number of parameters. While we have a kind of default reference architecture (transformers!), what strikes us as odd is that these laws measure data by the number of tokens, which is a fairly crude proxy for the information content of data... In our experience, data is incredibly varied in topic and quality. Our hunch is that what should matter is some measure of the actual bits of information, accounting for overlap and degree; information-theoretic concepts like entropy seem relevant to making both small and big foundation models more awesome.

- Knowledge Injection and Computation at Test-time. Foundation models don't always work out of the box, but using test-time compute in new ways can make a big difference! Given the cost and lack of privacy of closed-source model APIs, we recently showed that an open-source foundation model with 30x fewer parameters could beat OpenAI's closed-source model on canonical benchmarks by using the small model effectively at test time. The method is called Ask Me Anything (AMA) Prompting. At test time, users control foundation models via prompts, or natural-language descriptions of their task of interest, and prompt design can have a huge impact on performance. Getting the prompt exactly right is tricky and painstaking, so AMA instead uses a collection of noisy prompts of varying quality and applies statistical theory to cope with the noise. AMA is inspired by many works: Maieutic Prompting, Reframing GPT-k, AI Chains, and others! The point is that we can use test-time compute in new ways; we don't need to prompt a model just once! It's not just about curating data at train time, but also about shaping input data and contextual data at test time.
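As a toy illustration of the data-curation point that token count alone is a crude proxy for information content, the sketch below compares two made-up corpora with exactly the same number of tokens. Unigram entropy and the number of distinct 3-grams are just two simple, hypothetical stand-ins for "bits of information"; we are not proposing them as the right measure, only showing that equal token counts can hide very different amounts of variety.

```python
import math
from collections import Counter

def corpus_stats(tokens):
    """Return (token count, unigram entropy in bits/token, number of distinct 3-grams)."""
    counts = Counter(tokens)
    total = len(tokens)
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values())
    distinct_trigrams = {tuple(tokens[i : i + 3]) for i in range(total - 2)}
    return total, entropy, len(distinct_trigrams)

# Two made-up corpora with exactly the same number of tokens (120 each).
repetitive = ("the cat sat on the mat " * 20).split()
varied = [f"word{i % 60}" for i in range(120)]  # synthetic: 60 distinct tokens, each used twice

for name, corpus in [("repetitive", repetitive), ("varied", varied)]:
    n_tokens, entropy, n_trigrams = corpus_stats(corpus)
    print(f"{name}: {n_tokens} tokens, {entropy:.2f} bits/token, {n_trigrams} distinct 3-grams")
```

A token-count scaling law would treat these two corpora as equivalent; any information-aware measure, even these crude ones, would not.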
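The "prompt many times, then aggregate" idea behind AMA can be sketched in a few lines. Everything here is hypothetical: the three prompt templates, the toy `fake_model` standing in for a small open-source LM, and the plain majority vote. The vote is a deliberate simplification; AMA uses statistical (weak supervision) machinery to weigh prompts of varying quality rather than counting every vote equally.

```python
from collections import Counter
from typing import Callable

# Hypothetical prompt templates: several imperfect phrasings of the same task.
PROMPTS = [
    "Is the following review positive? {text}\nAnswer:",
    "Question: Did the customer like the product? Review: {text}\nAnswer:",
    "{text}\nWould this person recommend it (yes/no)?",
]

def fake_model(prompt: str) -> str:
    """Toy stand-in for a small foundation model; a real system would call an LM here."""
    text = prompt.lower()
    if "recommend" in text:
        return "no"  # this prompt format happens to confuse the toy model
    return "yes" if "love" in text else "no"

def ask_many(text: str, model: Callable[[str], str]) -> str:
    """Query the model once per prompt template and aggregate the noisy answers."""
    votes = [model(p.format(text=text)).strip().lower() for p in PROMPTS]
    # Simple majority vote (a simplification of AMA's weak-supervision aggregation).
    return Counter(votes).most_common(1)[0][0]

print(ask_many("I love this blender", fake_model))
```

The third prompt gets the first review wrong on its own, but the other two outvote it, so no single prompt has to be exactly right.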

prompt: "really small AI model"

From AMA, we saw that small models already had the reasoning abilities to excel on many tasks, and that the key value of larger models appeared to be memorized factual knowledge. Small models did not do well on facts, so how should we bring in data and knowledge to fix this? It's weird that we store facts in neural networks by using SGD to smash them into nebulous floating-point values... it seems a much less efficient abstraction than, say, a key-value store backed by DRAM. But what do we know! We do see from the AMA results that the difference between small and large models is much smaller for temporally changing or domain-specialized facts... When we built self-supervised systems at Apple, we needed to be able to edit the facts we returned (for business reasons), and we needed to fit in with the rest of the software tools that run a service. So making the model call out to indexes was important. Time will tell whether these are compelling reasons to use this style of model, or whether someone will think of a cleverer way to use them.
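Here is a minimal sketch of "making the model call out to indexes": facts live in an editable key-value store outside the model's weights, and retrieved entries are placed into the prompt at test time. The store contents, the substring-match retrieval, and the prompt format are all hypothetical simplifications; a real system would use a proper search or embedding index and an actual model. The point is only that editing a fact becomes a dictionary update, with no retraining.

```python
from typing import Dict, List

# Hypothetical, editable fact index kept outside the model's weights.
fact_store: Dict[str, str] = {
    "return window": "30 days",
    "support hours": "9am-5pm",
}

def retrieve(question: str, store: Dict[str, str]) -> List[str]:
    """Naive retrieval: return facts whose key appears verbatim in the question."""
    q = question.lower()
    return [f"{key}: {value}" for key, value in store.items() if key in q]

def build_prompt(question: str, store: Dict[str, str]) -> str:
    """Place retrieved facts in the prompt so the model doesn't need to have memorized them."""
    context = "\n".join(retrieve(question, store)) or "(no matching facts)"
    return f"Facts:\n{context}\n\nQuestion: {question}\nAnswer:"

question = "What is the return window?"
print(build_prompt(question, fact_store))

# Editing a fact is a dictionary update: no retraining, and the next prompt reflects it.
fact_store["return window"] = "60 days"
print(build_prompt(question, fact_store))
```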

Where does this lead us? FMs alongside traditional approaches

Hypothesis: data-centric progress at both ends, exploration and deployment! For rapid iteration and task-agnostic workflows (the explore phase), we make our generalist, off-the-shelf foundation models more useful using data curation and test-time strategies. On exiting the explore phase, armed with a clearer task definition, users will ultimately use data-centric AI and training data curation (so your own data is critical!) in the Snorkel style to distill, combine, and correct the outputs of foundation models to train specialist deployment models. For example, they can use and combine multiple prompts and/or foundation models to train smaller, faster "specialist" models that are actually deployable in real production settings, and that are more accurate at your specific task, on your specific data! Or they can even use foundation models to improve weak supervision techniques, work for which some lab and Snorkel folks won an award at UAI!
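The distillation step can be sketched as follows: treat the combined foundation-model outputs as (noisy) training labels and fit a small, cheap model on them. The pseudo-labeled examples are made up, and multinomial Naive Bayes is just a deliberately tiny stand-in for whatever specialist model you would actually deploy; it is not the method from any particular paper.

```python
import math
from collections import Counter, defaultdict

# Hypothetical pseudo-labels: in the workflow above these would come from combining
# several prompts and/or foundation models over unlabeled data.
pseudo_labeled = [
    ("love the fast shipping", "pos"),
    ("great quality love it", "pos"),
    ("works great", "pos"),
    ("broke after one day", "neg"),
    ("terrible quality want refund", "neg"),
    ("refund please it broke", "neg"),
]

def train_naive_bayes(data):
    """Train a tiny multinomial Naive Bayes 'specialist' on (text, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in data:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab

def predict(model, text):
    """Return the label with the highest log-posterior for the given text."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / total_docs)
        for w in text.split():
            # Laplace smoothing so unseen words don't zero out the probability.
            score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train_naive_bayes(pseudo_labeled)
print(predict(model, "love it"), predict(model, "it broke"))
```

Once trained, the specialist answers without calling any foundation model at all, which is what makes it cheap to deploy.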

Ultimately, getting a model to production is about your data. Our data is the only thing that doesn't commoditize, and if it does, Amazon Basics <your business> is right around the corner! We still think that Snorkel's view of your data is the way to go: you need a programming abstraction, a way to express, combine, and iteratively correct different sources of data and supervision signal, and ultimately, a way to train a deployable model for your ultimate task.

Parting Thoughts

We decided to write this up because it helps us, and hopefully our students and collaborators. If it helps someone we've never met, awesome. It's not that serious.

prompt (left): "a dream of a world with artificial intelligence", prompt (right): "data-centric AI"

Thanks to Sabri, Mayee, Laurel, Avanika and Alex for thoughts on this post!

1. We don't intend to argue about symbolic knowledge versus deep learning. We're engineers and dreamers; we want awesome demos that work.

2. For ML theory nerds: yes, it's true that our understanding of generalization here still relies on parsimonious models. For us, Misha's papers about double descent really opened our eyes to memorization and generalization being compatible.

3. This felt a bit like gatekeeping in the last era, when everyone would talk about all the nebulous magic at big corporations... then you would visit and be disappointed. Please, young students, don't fall into this trap. YOU HAVE GREAT IDEAS.

4. This is a bit of a stretch, but Latanya Sweeney's finding that "87% of the U.S. population is uniquely identified by date of birth, gender, postal code" has always stuck with us and seems relevant here...

5. This point is underappreciated, in our view. For years, we were told that the data moats at megacorps were the reason they were all safe. Now, a huge number of people can train a model at home that looks like a pretty nice search engine. Pretty shocking!